The AI Readiness Pack

Build the Confidence, Controls, and Capability to Use AI Safely

Artificial intelligence is moving faster than legal, risk, and governance frameworks were designed to handle. Many organisations are already using AI (whether formally approved or not), creating silent exposure around data, intellectual property, client confidentiality, and accountability.

The AI Readiness Pack is a practical, defensible foundation that enables law firms and businesses to adopt AI with confidence. It puts the legal, governance, and behavioural guardrails in place so AI becomes an asset, not a liability.

Why AI Creates Risk Faster Than Most Organisations Realise

AI adoption is rarely a single decision. It happens organically — through individual tools, browser-based assistants, document drafting, research, and experimentation.

Without a structured framework, organisations face:

Unclear ownership of AI‑generated intellectual property

Accidental exposure of client or confidential data

Inconsistent and unsafe AI use across teams

No defensible position with clients, regulators, or insurers

Staff uncertainty about what is allowed — and what isn’t

AI readiness isn’t about stopping innovation. It’s about making innovation safe, scalable, and trusted.

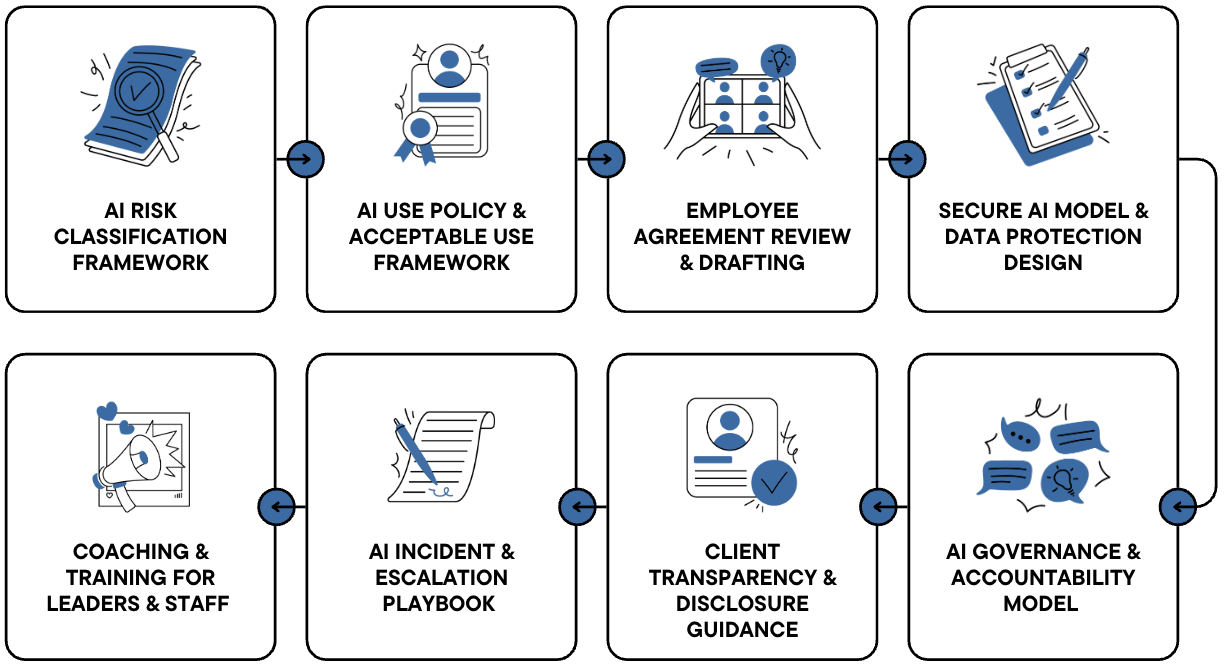

The AI Readiness Pack

The AI Readiness Pack is a structured, end‑to‑end enablement program that establishes the legal, technical, and human foundations required to use AI safely.

It combines policy, contracts, governance, secure technology configuration, and hands‑on coaching to ensure AI use is controlled, transparent, and aligned to your risk appetite. This is not a theoretical policy pack. It is an operating foundation backed by our legal team.

What’s Included

Employment Agreement Review

We review and update employment agreements to ensure they reflect modern AI realities, including:

Clear obligations for safe and compliant AI use

Explicit ownership of AI‑generated intellectual property

Protection of client data, confidential information, and trade secrets

Alignment with professional, ethical, and regulatory obligations

Outcome: Your firm owns the outputs, protects clients, and removes ambiguity before it becomes a dispute.

AI Use Policy

We design a practical, plain‑English AI Use Policy that works alongside your IT and security policies.

The policy includes:

Clear definitions of AI and AI‑assisted tools

Examples of approved, restricted, and prohibited use cases

Guidance on where human‑in‑the‑loop review is mandatory

Rules for handling client data, confidential information, and prompts

Expectations for accuracy, verification, and professional judgment

Outcome: Staff know exactly how AI can (and cannot) be used in practice.

AI Risk Framework

We introduce a simple but powerful risk framework that classifies AI use by risk level rather than by technology.

Typically covering:

Low‑risk (administrative, formatting, summarisation)

Medium‑risk (drafting support, research assistance)

High‑risk (client‑facing outputs, advice‑adjacent work)

Prohibited use cases

Outcome: Partners and teams have a shared lens for making safe, consistent decisions.

Secure AI Configuration

Where required, we help establish or configure an AI model and usage approach that protects sensitive data.

This includes guidance on:

Preventing client data from being used to train public models

Data isolation and access controls

Tool configuration aligned to your risk profile

Outcome: AI can be used productively without exposing client or firm data.

AI Governance and Accountability

We define how AI decisions are owned and governed within your organisation.

This includes:

Clear accountability for AI oversight

Approval pathways for new AI use cases

Escalation processes for issues or incidents

Ongoing review and policy refresh mechanisms

Outcome: AI risk is actively governed, with clear owners and a defined process for change.

Client Communication Guidance

We provide guidance on how to communicate AI use to clients when appropriate, including:

When disclosure is required or advisable

How to frame AI use in engagement letters or proposals

Consistent language for responding to client questions

Outcome: Client trust is strengthened, not eroded, by AI adoption.

AI Incident Response Guide

We deliver a practical response guide for when AI‑related issues arise.

Covering:

What constitutes an AI incident

Immediate response steps

Internal escalation and decision‑making

Client communication principles

Outcome: Your team knows exactly what to do when something goes wrong.

Coaching and Training

Policies don’t work unless people understand them.

We deliver targeted coaching and training sessions to:

Explain the why behind the rules

Walk through real‑world scenarios and edge cases

Build confidence in safe, effective AI use

Reduce shadow AI usage driven by uncertainty

Outcome: Adoption is deliberate, confident, and consistent across the organisation.

Client‑Confirmed Value

AI is reshaping how work is done, whether organisations are ready or not. This pack ensures you can:

Reduce legal, regulatory, and reputational risk

Protect client confidentiality and intellectual property

Enable innovation without losing control

Demonstrate maturity to clients, insurers, and regulators

Build a foundation that scales as AI evolves

AI readiness is not just about compliance. It’s about confidence.

Who Is This For?

The AI Readiness Pack is designed for:

Law firms adopting or already experimenting with AI

In‑house legal teams supporting broader business AI use

Professional services firms handling sensitive client data

Leadership teams seeking a defensible AI position

Why Choose Everingham Legal

Everingham Legal sits at the intersection of law, technology, and operations.

We design systems that work in the real world. Our approach is:

Practical: Built for how professionals actually work

Independent: Vendor‑neutral and risk‑focused

Human‑centred: Designed to amplify judgment, not replace it

We help organisations move forward with clarity.